TL;DR Science: Artificial Intelligence Bias

By Thomas P.

February 03, 2021 · 3 minute read

Despite the term 'artificial intelligence,' machines are actually quite primitive. While a computer can process and store information at astounding rates, everyday tasks like reading emotions or noticing sarcasm are difficult to code into a machine. Machines and the artificial intelligence programs we create are only as smart as we make them. In this sense, AI is similar to a child: we have to teach the computer everything it knows about the world.

Google's AI BERT

Many people assume that AI is neutral, but because AI is made by humans and trained on material written by humans, it can pick up human biases. Artificial intelligence built by Google, whose parent company is Alphabet, has recently come under scrutiny. The company’s AI technology, named BERT, reportedly learned by reading many Wikipedia articles, century-old books, and newspaper articles. Each of these sources is likely to carry some bias. For example, century-old books may use terms for women or minorities that are no longer considered acceptable; likewise, Wikipedia articles are user-generated and can reflect the views of their contributors. Finally, newspaper articles can have political leanings depending on the source. According to an article in the New York Times, computer scientist Robert Munro gave BERT 100 words and found that it associated 99 of them with men rather than women. It is possible that the biased sources mentioned above, many of which may have been written by men (such as the century-old books), skewed BERT's responses toward associating these words with men.
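
To see how skewed text can lead to skewed associations, here is a minimal Python sketch. It is not how BERT works internally, and the tiny corpus is invented for illustration; the function names `gender_counts` and `leaning` are hypothetical. The sketch simply counts which pronouns appear in the same sentence as a given word, which is a much cruder measure than anything a real language model uses.

```python
from collections import Counter

# Toy corpus invented for illustration; this is NOT BERT's training data.
CORPUS = [
    "he is a doctor and he owns a house",
    "he has money and he likes action",
    "she is a mom and she loves her baby",
    "he rides horses near his house",
]

MALE = {"he", "him", "his"}
FEMALE = {"she", "her", "hers"}


def gender_counts(corpus, word):
    """Count male and female pronouns in sentences that contain `word`."""
    counts = Counter()
    for sentence in corpus:
        tokens = sentence.split()
        if word in tokens:
            counts["male"] += sum(t in MALE for t in tokens)
            counts["female"] += sum(t in FEMALE for t in tokens)
    return counts


def leaning(corpus, word):
    """Report which pronoun group co-occurs with `word` more often."""
    counts = gender_counts(corpus, word)
    if counts["male"] > counts["female"]:
        return "male"
    if counts["female"] > counts["male"]:
        return "female"
    return "neutral"


for word in ["house", "money", "mom"]:
    print(word, "->", leaning(CORPUS, word))
```

Because most sentences in this made-up corpus are about men, ordinary words like "house" and "money" end up leaning male. The words themselves are not gendered; the source text is lopsided.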

C.O.M.P.A.S.

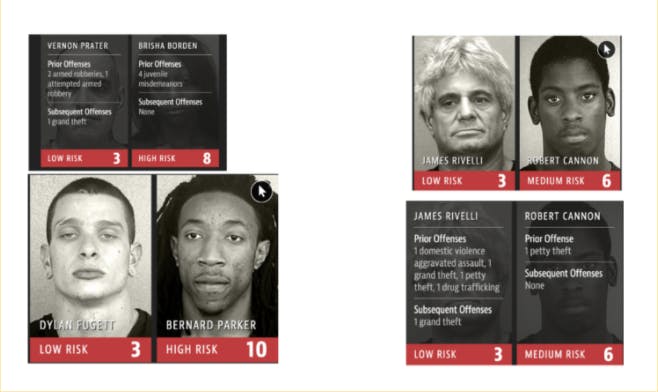

An example of racial bias is a program called C.O.M.P.A.S., which was created to estimate the probability that a person charged with a crime will offend again. The image above shows examples of the program’s risk ratings.

In the left picture, the system rated the African-American man as “high risk,” although he had no prior offenses as an adult. On the other hand, the system rated his white counterpart as lower risk, although he had more prior criminal activity. So what are the implications of this? Because C.O.M.P.A.S. was used to rate people by the threat (risk) they pose to their community, its ratings could influence both the bail amount set in court and the eventual sentence. Either of these could determine how much time someone spends in jail or prison.

ImageNet

Racial bias also shows up in image recognition. “ImageNet” is a large collection of labeled images used to train image-recognition AI, and systems trained on it tended to misrecognize images of people from certain races. For example, when shown pictures of programmers, such systems were more likely to assume that the programmers were white, because the images labeled as programmers were racially skewed. This bias toward people of lighter complexion is even more noticeable when we look at how AI identifies people with different skin colors. Often, the pictures used to train artificial intelligence programs are predominantly of white people, which leads to the misidentification of other ethnicities. In one extreme case, an AI program labeled an African-American person as a gorilla, which shows the serious consequences of overrepresenting white faces in training data. This prompted scientists to undertake the task of diversifying the image set to make the software less biased.
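
One simple first step toward diversifying an image set is to measure how lopsided it is. The Python sketch below does that, assuming each image already carries a group label. The labels, the function names `group_shares` and `underrepresented`, and the 20% threshold are all hypothetical choices for illustration; they do not come from ImageNet or from any real auditing tool.

```python
from collections import Counter


def group_shares(labels):
    """Return each group's share of the dataset as a fraction of all items."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(labels, min_share=0.2):
    """List groups whose share is below `min_share` (an arbitrary threshold)."""
    shares = group_shares(labels)
    return sorted(group for group, share in shares.items() if share < min_share)


# Made-up labels for a tiny image set of 20 pictures.
labels = ["group_a"] * 17 + ["group_b"] * 2 + ["group_c"] * 1

for group, share in sorted(group_shares(labels).items()):
    print(f"{group}: {share:.0%}")

print("Underrepresented:", underrepresented(labels))
```

A check like this only shows that a dataset is unbalanced. Fixing the imbalance still means collecting or selecting more images of the groups that are missing.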

And there you have it: three examples where AI was not trained effectively and ended up with significant biases in the final product. It is important for the scientists and programmers who work on these machines in the future to understand the possible sources of bias and work to eliminate them. While AI holds much potential for growth, handling the technology responsibly will be key to using it well in the future.

TL;DR AI has biases similar to those of its human creators. Developers of this new technology need to be aware of the limitations of AI when using it to help make decisions.

Sources:

- https://www.nytimes.com/2019/11/11/technology/artificial-intelligence-bias.html?action=click&module=RelatedLinks&pgtype=Article

- https://www.nytimes.com/2020/12/09/technology/timnit-gebru-google-pichai.html?searchResultPosition=2

- https://www.nytimes.com/2018/02/09/technology/facial-recognition-race-artificial-intelligence.html?action=click&module=RelatedLinks&pgtype=Article

- https://www.wired.com/story/ai-biased-how-scientists-trying-fix/

- https://towardsdatascience.com/racist-data-human-bias-is-infecting-ai-development-8110c1ec50c

About The Author

Thomas is a high school student at Eastside High.